If you’ve looked at your inbox or LinkedIn feed lately, you could be forgiven for thinking the world is ending or, depending on who you listen to, that it’s being reinvented entirely by algorithms. We are deep in the second inning of the AI revolution, and nowhere is this more chaotic than in the SaaS (Software as a Service) landscape. Every dashboard, spreadsheet app, and calendar tool now wears an “AI feature” badge. But here’s the reality check I’ve gathered from years of watching startups burn money and pivot strategies: AI SaaS platforms are still finding their footing.

We’ve moved past the “Hello World” phase of LLMs (Large Language Models), but we are entering a critical, albeit messy, phase of maturation. As someone who has evaluated dozens of these tools for businesses ranging from scrappy two-person teams to enterprise conglomerates, I can tell you that not all “AI-native” software is built equally. We need to separate the genuine industry shifts from the feature-creep hype.

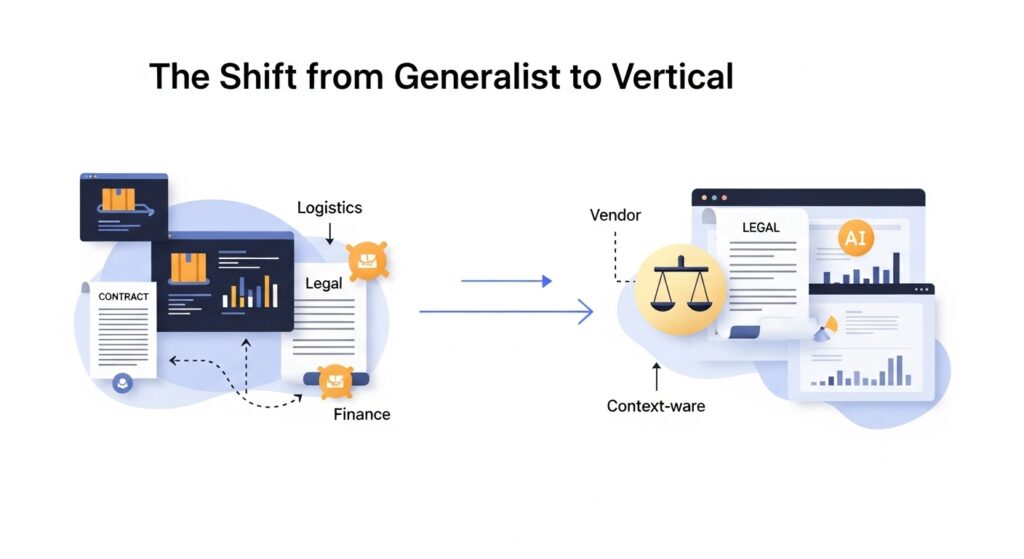

The Shift from Generalist to Vertical

For a long time, the dream of AI SaaS was a single platform that could do everything: write code, file taxes, draft contracts, and manage human resources. It was the Swiss Army Knife approach. But the market reality, supported by real-world data, suggests that generalist AI agents are hitting a plateau. They are impressive, sure, but they lack the specific context of a niche industry.

The current trend, and the one I find most promising, is the rise of specialized vertical SaaS platforms. These are tools built on top of general AI models but tailored to the complex regulatory and operational standards of specific sectors. Think about the difference between using ChatGPT to write a marketing email and using an AI SaaS platform designed specifically for legal firms that understands precedent, contract nuances, and jurisdictional risks.

I recently advised a client in the logistics space who was drowning in supplier data. They implemented an AI logistics platform that didn’t just analyze general text; it understood shipment customs codes, vendor reliability scores, and route optimization based on weight class. That’s where the real value is. This verticalization is essential because it filters out the noise. It makes the AI context-aware, not just probability-aware.

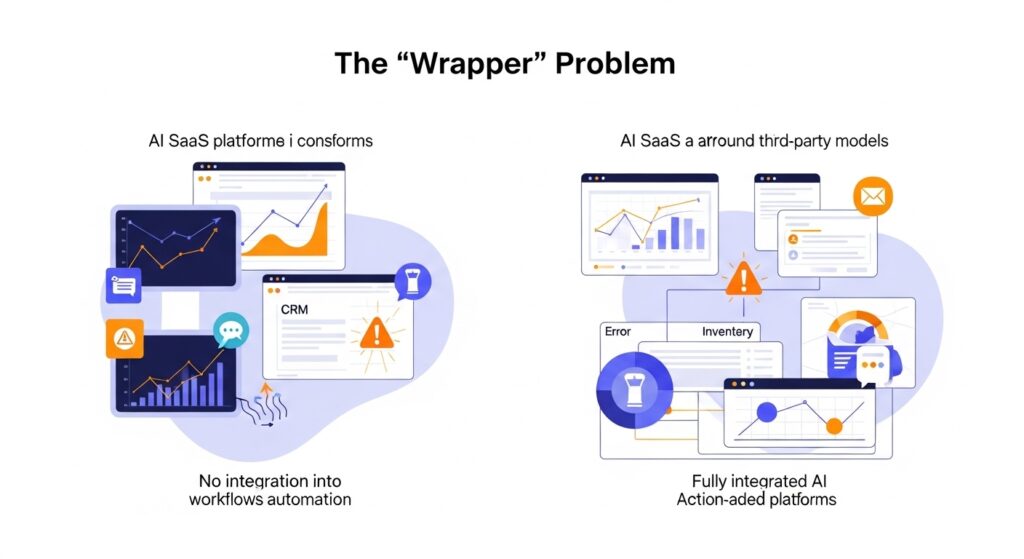

The “Wrapper” Problem

Let’s talk about the elephant in the room: the “wrapper.” There is a significant portion of the current AI SaaS market that is nothing more than a prompt interface wrapped around a third-party model like OpenAI’s GPT-4 or Anthropic’s Claude. These platforms don’t own the data, they don’t own the user relationship, and most importantly, they have no recurring revenue moat once the early adopter frenzy fades.

When evaluating these platforms, I tell clients to look for something called “closed-loop AI.” This means the software doesn’t just generate a response; it acts on it. It modifies a database, updates a CRM, or schedules a meeting. If a tool doesn’t integrate into your workflow and automate a concrete action, it’s often just a novelty.

A concrete example of a wrapper failing came to light when a client tried to use a generic AI customer service tool. The bot was fancy, but it couldn’t access their inventory database. It hallucinated stock availability, leading to angry customers and canceled orders. A robust AI SaaS platform doesn’t guess; it connects to the systems that hold the answer.
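To make the contrast concrete, here is a minimal sketch of a grounded, closed-loop stock answer. Everything in it is an assumption for illustration: the `INVENTORY` dictionary stands in for a real inventory database, and the function names are hypothetical rather than any vendor’s API.

```python
# Hypothetical inventory data standing in for a real database.
INVENTORY = {"SKU-100": 12, "SKU-200": 0}


def answer_stock_question(sku: str, inventory: dict[str, int]) -> str:
    """Answer only from the system of record; never guess."""
    if sku not in inventory:
        # Unknown item: escalate instead of inventing an answer.
        return f"I can't find {sku} in our catalog, so I'm handing this to a human."
    units = inventory[sku]
    if units > 0:
        return f"{sku} is in stock ({units} units)."
    return f"{sku} is currently out of stock."


def place_order(sku: str, qty: int, inventory: dict[str, int]) -> bool:
    """Closed loop: the action updates the same data the answer came from."""
    if inventory.get(sku, 0) < qty:
        return False
    inventory[sku] -= qty
    return True


print(answer_stock_question("SKU-100", INVENTORY))
print(answer_stock_question("SKU-999", INVENTORY))
```

The point of the sketch is the shape, not the code: the reply is read from the inventory record, and the order writes back to it, so the bot has nothing to make up.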

The Rise of Autonomous Agents

We are seeing the emergence of AI that can plan and execute multi-step tasks without human intervention. This is the evolution of traditional automation like Zapier or Make, which was essentially a series of if-this-then-that triggers. AI agents are different because they can reason through exceptions.

I’ve seen platforms now that can handle the entire “order-to-cash” cycle. The system can receive a purchase order, check it against the customer’s credit limit to reduce fraud risk, issue the invoice, and even follow up if the invoice isn’t paid, all while staying within the brand’s specific tone of voice. This is a massive leap forward. It moves us away from “human-in-the-loop” management toward true autonomy. However, this autonomy requires strict governance. If an agent has the power to move money or destroy data, you need confidence thresholds and clear permission gates.
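A permission gate can be as simple as a policy check that runs before any agent action executes. The sketch below is one possible shape, not a description of any particular platform: the action names, the dollar limits, and the 0.9 confidence floor are all hypothetical values chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class ProposedAction:
    kind: str          # e.g. "send_invoice" or "send_reminder" (hypothetical names)
    amount: float      # monetary value the action touches
    confidence: float  # agent's self-reported confidence, 0.0 to 1.0


# Hypothetical policy: action kinds the agent may take alone, with spending caps.
AUTO_APPROVE_LIMITS = {"send_invoice": 10_000.0, "send_reminder": float("inf")}
MIN_CONFIDENCE = 0.9


def gate(action: ProposedAction) -> str:
    """Decide whether an action runs, waits for a human, or is refused."""
    limit = AUTO_APPROVE_LIMITS.get(action.kind)
    if limit is None:
        # Anything not explicitly allowed (deleting data, say) is blocked.
        return "blocked"
    if action.confidence < MIN_CONFIDENCE or action.amount > limit:
        return "needs_human_review"
    return "auto_approved"


print(gate(ProposedAction("send_invoice", 500.0, 0.95)))   # auto_approved
print(gate(ProposedAction("delete_records", 0.0, 0.99)))   # blocked
```

Defaulting to “blocked” for unlisted actions is the design choice that matters here: the agent gains new powers only when someone adds them to the policy on purpose.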

The Economic Reality: Margins Are Under Pressure

Here is where this editorial gets a bit darker. The hype around AI SaaS often obscures the economic reality. Developing a good AI model is expensive. Inference costs, meaning the cost of running those models every time a user clicks a button, are increasing. SaaS margins, which used to be healthy (60-80%), are being squeezed.
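The squeeze is easy to see with back-of-the-envelope arithmetic. The numbers below are invented for illustration only (a $50 seat, $10 of baseline serving cost, 400 AI requests per user per month at $0.03 each); plug in your own figures.

```python
def gross_margin(price: float, base_cogs: float,
                 requests: int = 0, cost_per_request: float = 0.0) -> float:
    """Per-seat gross margin: (price - cost of serving the seat) / price."""
    cogs = base_cogs + requests * cost_per_request
    return (price - cogs) / price


# Hypothetical per-seat numbers, not data from any real product.
before = gross_margin(price=50.0, base_cogs=10.0)
after = gross_margin(price=50.0, base_cogs=10.0,
                     requests=400, cost_per_request=0.03)

print(f"Without AI features: {before:.0%}")  # 80%
print(f"With AI features:    {after:.0%}")   # 56%
```

Under these assumptions, $12 of monthly inference per seat is enough to pull an 80% margin down to 56%, below the traditional range, unless the price rises or usage is capped.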

Many startups are pricing their AI tools at a premium, banking on the “AI tax,” the willingness of companies to pay more for something that offers speed. But this is fragile. If a competitor builds a similar vertical tool for half the price, the premium disappears. I’ve observed a trend where established SaaS companies are slowly reducing their AI pricing to normalize costs, knowing that the underlying utility of the software is often just a better workflow, not magic.

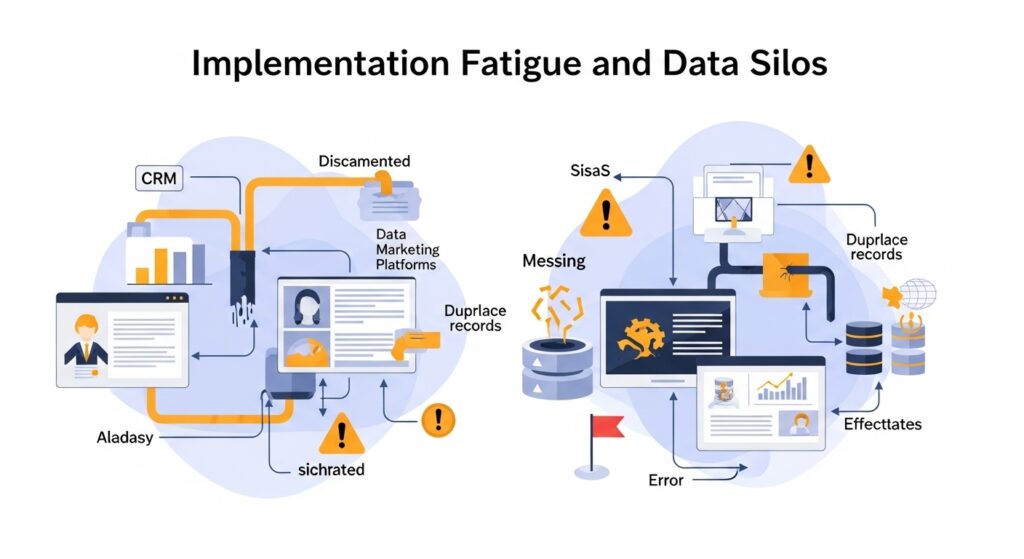

Implementation Fatigue and Data Silos

Despite the promises, AI SaaS platforms struggle with the messiness of real businesses. Most companies still have data silos. Sales data lives in Salesforce, marketing data in HubSpot, and operations data in an old on-premise server. An AI platform sitting in the cloud cannot see the whole picture.

I recently tried to implement an AI analytics platform for a manufacturing firm. The data quality was so poor, with missing fields, inconsistent naming conventions, and duplicate records, that the AI spent 90% of its time flagging errors instead of providing insights. The technology is smart, but the data it consumes must be impeccable. This brings up a critical ethical and practical limitation: AI cannot clean your dirty laundry for you. In fact, it will likely amplify any bias in your historical data.
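Before pointing any AI platform at a dataset, it is worth running a quick audit for exactly these three problems. Here is a minimal sketch in plain Python; the field names (`part_id`, `supplier`, `unit_cost`) and the sample records are hypothetical, and a real audit would cover far more cases.

```python
from collections import Counter

# Hypothetical required fields for a parts table.
REQUIRED_FIELDS = ("part_id", "supplier", "unit_cost")


def audit(records: list[dict]) -> dict:
    """Count missing fields, inconsistent supplier spellings, and duplicate IDs."""
    rows_missing = sum(
        1 for r in records
        if any(r.get(f) in (None, "") for f in REQUIRED_FIELDS)
    )

    # Group supplier spellings that differ only by case or stray whitespace.
    variants: dict[str, set[str]] = {}
    for r in records:
        name = r.get("supplier")
        if name:
            variants.setdefault(name.strip().lower(), set()).add(name)
    inconsistent = {k: sorted(v) for k, v in variants.items() if len(v) > 1}

    id_counts = Counter(r["part_id"] for r in records if r.get("part_id"))
    duplicates = sorted(pid for pid, n in id_counts.items() if n > 1)

    return {
        "rows_missing_fields": rows_missing,
        "inconsistent_names": inconsistent,
        "duplicate_ids": duplicates,
    }


sample = [
    {"part_id": "P1", "supplier": "Acme Corp", "unit_cost": 4.2},
    {"part_id": "P1", "supplier": "acme corp ", "unit_cost": 4.2},
    {"part_id": "P2", "supplier": "Bolt Ltd", "unit_cost": None},
]
print(audit(sample))
```

If a report like this comes back full of findings, fix the data first; the AI platform will only surface the same problems more expensively.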

The Trust Barrier

Trust is the new currency. When using AI SaaS, users are essentially outsourcing decision-making to an opaque model. Why did the AI route this shipment to this specific port? It’s often a “black box.”

Trust comes from explainability. The best platforms I’ve seen allow you to trace the chain of thought and answer questions like “Why did this agent approve this request?” If you can’t understand the logic, you can’t trust the outcome. This is especially critical in regulated industries like healthcare and finance, where an algorithmic error isn’t just a bug; it’s a liability.

Conclusion: Software-as-a-Service 2.0

We are moving from Software 1.0 (static code) to Software 2.0 (data-driven code). AI SaaS platforms are the vanguard of this era, but they are currently navigating a minefield of hype, cost, and integration challenges.

The tools that will survive the next two years aren’t the ones with the shiniest demos; they are the verticalized, autonomous, and deeply integrated platforms that solve specific problems without burning a hole in the company’s budget. The goal isn’t to replace humans with bots; it’s to empower your team to be smarter, not just faster. If you’re looking to invest, look for efficiency gains in your workflows, not just feature bells and whistles.

FAQs

What is the difference between SaaS and AI SaaS?

A: Traditional SaaS provides tools for tasks (like accounting or email). AI SaaS incorporates artificial intelligence to automate those tasks, analyze data, and make autonomous decisions, rather than just providing a static interface for the user to operate.

Are AI SaaS platforms a passing trend?

A: While the hype cycle will cool, AI integration is permanent. The transition from “features” to “intelligence” is the new standard. However, the specific “wrapper” startups may struggle, while verticalized, tool-based integrations will likely thrive.

What is “vertical AI SaaS”?

A: This refers to AI platforms tailored to specific industries (e.g., law, construction, logistics) rather than general business tasks. They understand industry-specific jargon, regulations, and workflows, making them much more effective than general chatbots.

What are the risks of using AI SaaS platforms?

A: Key risks include data privacy issues, “hallucinations” (generating false information), high inference costs that erode margins, and algorithmic bias. Additionally, if the AI creates a workflow that is too complex to explain or reverse, it can create operational bottlenecks.

How can I ensure data privacy when using AI SaaS?

A: Always review the vendor’s data usage policy. Ensure that the data is not being used to train third-party models without explicit consent. For sensitive information, look for “zero-retention” policies where data is not stored after processing, though these can sometimes reduce performance.